Restricted Boltzmann Machine features for digit classification

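For greyscale image data where pixel values can be interpreted as degrees of blackness on a white background, as in handwritten digit recognition, the Bernoulli Restricted Boltzmann Machine model (BernoulliRBM) can perform effective non-linear feature extraction.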
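As a minimal sketch of what feature extraction means here, the snippet below fits a small BernoulliRBM on the digits data and transforms each image into hidden-unit activations. The 16 components, the 5 iterations, and the division by 16 (the maximum pixel value in this dataset) to reach the [0, 1] range are illustrative assumptions, not the settings used in this example.

```python
import numpy as np
from sklearn import datasets
from sklearn.neural_network import BernoulliRBM

# Digits pixels are integers in 0..16; BernoulliRBM expects values in [0, 1].
X_raw, _ = datasets.load_digits(return_X_y=True)
X_scaled = np.asarray(X_raw, dtype="float32") / 16.0

# Illustrative (assumed) settings, much smaller than in the full example.
demo_rbm = BernoulliRBM(n_components=16, n_iter=5, random_state=0)
hidden = demo_rbm.fit_transform(X_scaled)

# Each 64-pixel image is now described by 16 hidden-unit activation
# probabilities, which a downstream classifier can use as features.
print(X_scaled.shape, "->", hidden.shape)
```

The names `demo_rbm` and `hidden` are hypothetical and exist only for this illustration.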

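In order to learn good latent representations from a small dataset, we artificially generate more labeled data by perturbing the training data with linear shifts of 1 pixel in each direction.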
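To make the one-pixel shift concrete, here is a small sketch on a toy 4x4 image rather than the real 8x8 digits. It assumes the shift is implemented by convolving with a 3x3 kernel that has a single 1 placed off-centre, with zero padding at the borders; the toy image and the name `kernel_up` are illustrative only.

```python
import numpy as np
from scipy.ndimage import convolve

# A toy 4x4 "image" with a single dark pixel at row 2, column 1.
image = np.zeros((4, 4))
image[2, 1] = 1.0

# A 3x3 kernel with a single 1 above the centre. Convolving with it
# translates the image by one pixel; mode="constant" pads with zeros.
kernel_up = [[0, 1, 0],
             [0, 0, 0],
             [0, 0, 0]]
shifted = convolve(image, weights=kernel_up, mode="constant")

# Report where the dark pixel was and where it ended up.
print("before:", np.argwhere(image == 1.0).tolist())  # [[2, 1]]
print("after: ", np.argwhere(shifted == 1.0).tolist())  # [[1, 1]]
```

Placing the 1 at a different edge position of the kernel moves the image in the corresponding other direction, which is how four kernels yield four shifted copies of every sample.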

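This example shows how to build a classification pipeline with a BernoulliRBM feature extractor and a LogisticRegression classifier. The hyperparameters of the entire model (learning rate, hidden layer size, regularization) were optimized by grid search, but the search is not reproduced here because of runtime constraints.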
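Since the search itself is not reproduced, the following is only a rough sketch of how such a grid search over the pipeline could be set up with GridSearchCV. The candidate values, the 300-sample subset, and the 3-fold setting are assumptions chosen to keep the run short; they are not the grid the authors actually searched.

```python
from sklearn import datasets, linear_model
from sklearn.model_selection import GridSearchCV
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline

# Small subset of the digits data, scaled to [0, 1], to keep the sketch fast.
X_all, y_all = datasets.load_digits(return_X_y=True)
X_small, y_small = X_all[:300] / 16.0, y_all[:300]

pipeline = Pipeline(steps=[
    ("rbm", BernoulliRBM(n_iter=5, random_state=0)),
    ("logistic", linear_model.LogisticRegression(max_iter=200)),
])

# Hypothetical grid: "<step name>__<parameter>" addresses a pipeline step.
param_grid = {
    "rbm__learning_rate": [0.01, 0.06],
    "rbm__n_components": [16, 32],
    "logistic__C": [1.0, 100.0],
}

# Every combination is fitted with 3-fold cross-validation.
search = GridSearchCV(pipeline, param_grid, cv=3)
search.fit(X_small, y_small)
print(search.best_params_)
```

Searching the pipeline as a whole, rather than each step separately, means the RBM is refitted inside every cross-validation fold, so the reported scores are not biased by features learned from held-out data.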

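Logistic regression on raw pixel values is presented for comparison. The example shows that the features extracted by the BernoulliRBM help improve the classification accuracy.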

print(__doc__)

# Authors: Yann N. Dauphin, Vlad Niculae, Gabriel Synnaeve
# License: BSD

import numpy as np
import matplotlib.pyplot as plt

from scipy.ndimage import convolve
from sklearn import linear_model, datasets, metrics
from sklearn.model_selection import train_test_split
from sklearn.neural_network import BernoulliRBM
from sklearn.pipeline import Pipeline
from sklearn.base import clone

# #############################################################################
# Setting up

def nudge_dataset(X, Y):
    """
    Move the 8x8 images in X by 1px to the left, right, down, and up.

    This produces a dataset 5 times bigger than the original one.
    """
    direction_vectors = [
        [[0, 1, 0],
         [0, 0, 0],
         [0, 0, 0]],

        [[0, 0, 0],
         [1, 0, 0],
         [0, 0, 0]],

        [[0, 0, 0],
         [0, 0, 1],
         [0, 0, 0]],

        [[0, 0, 0],
         [0, 0, 0],
         [0, 1, 0]]]

    def shift(x, w):
        # Convolve one flattened 8x8 image with a shift kernel, padding with 0
        return convolve(x.reshape((8, 8)), mode='constant', weights=w).ravel()

    # Original samples followed by the four shifted copies
    X = np.concatenate([X] +
                       [np.apply_along_axis(shift, 1, X, vector)
                        for vector in direction_vectors])
    # Labels are unchanged by shifting, so repeat them once per copy
    Y = np.concatenate([Y for _ in range(5)], axis=0)
    return X, Y

# Load Data
X, y = datasets.load_digits(return_X_y=True)
X = np.asarray(X, 'float32')
X, Y = nudge_dataset(X, y)
X = (X - np.min(X, 0)) / (np.max(X, 0) + 0.0001)  # 0-1 scaling

X_train, X_test, Y_train, Y_test = train_test_split(
    X, Y, test_size=0.2, random_state=0)

# Models we will use
logistic = linear_model.LogisticRegression(solver='newton-cg', tol=1)
rbm = BernoulliRBM(random_state=0, verbose=True)

rbm_features_classifier = Pipeline(
    steps=[('rbm', rbm), ('logistic', logistic)])

# #############################################################################
# Training

# Hyper-parameters. These were set by cross-validation using GridSearchCV.
# Here we do not perform cross-validation, to save time.
rbm.learning_rate = 0.06
rbm.n_iter = 10
# More components tend to give better prediction performance,
# but a longer fitting time
rbm.n_components = 100
logistic.C = 6000

# Training the RBM-Logistic pipeline
rbm_features_classifier.fit(X_train, Y_train)

# Training the logistic regression classifier directly on the pixels
raw_pixel_classifier = clone(logistic)
raw_pixel_classifier.C = 100.
raw_pixel_classifier.fit(X_train, Y_train)

# #############################################################################
# Evaluation

Y_pred = rbm_features_classifier.predict(X_test)
print("Logistic regression using RBM features:\n%s\n" % (
    metrics.classification_report(Y_test, Y_pred)))

Y_pred = raw_pixel_classifier.predict(X_test)
print("Logistic regression using raw pixel features:\n%s\n" % (
    metrics.classification_report(Y_test, Y_pred)))

# #############################################################################

# Plotting

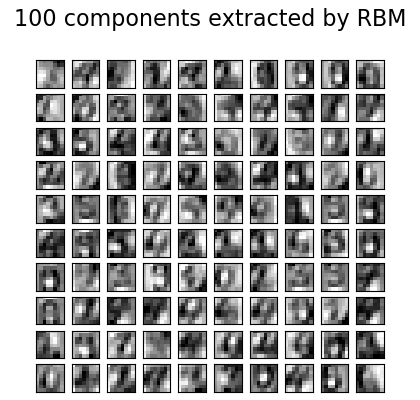

plt.figure(figsize=(4.2, 4))

for i, comp in enumerate(rbm.components_):

plt.subplot(10, 10, i + 1)

plt.imshow(comp.reshape((8, 8)), cmap=plt.cm.gray_r,

interpolation='nearest')

plt.xticks(())

plt.yticks(())

plt.suptitle('100 components extracted by RBM', fontsize=16)

plt.subplots_adjust(0.08, 0.02, 0.92, 0.85, 0.08, 0.23)

plt.show()

Output:

[BernoulliRBM] Iteration 1, pseudo-likelihood = -25.39, time = 0.26s
[BernoulliRBM] Iteration 2, pseudo-likelihood = -23.77, time = 0.35s
[BernoulliRBM] Iteration 3, pseudo-likelihood = -22.94, time = 0.35s
[BernoulliRBM] Iteration 4, pseudo-likelihood = -21.91, time = 0.35s
[BernoulliRBM] Iteration 5, pseudo-likelihood = -21.69, time = 0.34s
[BernoulliRBM] Iteration 6, pseudo-likelihood = -21.06, time = 0.35s
[BernoulliRBM] Iteration 7, pseudo-likelihood = -20.89, time = 0.34s
[BernoulliRBM] Iteration 8, pseudo-likelihood = -20.64, time = 0.36s
[BernoulliRBM] Iteration 9, pseudo-likelihood = -20.36, time = 0.35s
[BernoulliRBM] Iteration 10, pseudo-likelihood = -20.09, time = 0.34s

Logistic regression using RBM features:
              precision    recall  f1-score   support

           0       0.99      0.98      0.99       174
           1       0.91      0.93      0.92       184
           2       0.94      0.96      0.95       166
           3       0.96      0.90      0.93       194
           4       0.97      0.94      0.96       186
           5       0.91      0.92      0.92       181
           6       0.98      0.97      0.97       207
           7       0.94      0.98      0.96       154
           8       0.91      0.90      0.90       182
           9       0.87      0.91      0.89       169

    accuracy                           0.94      1797
   macro avg       0.94      0.94      0.94      1797
weighted avg       0.94      0.94      0.94      1797

Logistic regression using raw pixel features:
              precision    recall  f1-score   support

           0       0.90      0.92      0.91       174
           1       0.60      0.58      0.59       184
           2       0.76      0.85      0.80       166
           3       0.78      0.79      0.78       194
           4       0.82      0.84      0.83       186
           5       0.76      0.76      0.76       181
           6       0.90      0.87      0.89       207
           7       0.85      0.88      0.87       154
           8       0.67      0.58      0.62       182
           9       0.75      0.76      0.75       169

    accuracy                           0.78      1797
   macro avg       0.78      0.78      0.78      1797
weighted avg       0.78      0.78      0.78      1797

Total running time of the script: (0 minutes 8.949 seconds)